Death to the Prompt Engineer

#141 - LLMs don’t reward clever prompts. They reward clear thinking.

Hello friends, I hope you had a good week.

This post starts with a confession: I never bought the hype around prompt engineering. When everyone was rushing to build prompt libraries and swap “best practices” like they were trading Pokémon cards, I was already skeptical. It all felt a bit too much like a solution in search of a real problem.

The idea, if you remember, was simple and seductive: learning how to write better prompts would unlock better results from AI. If you phrased things right, magical outcomes would follow. If the model failed, the prompt was to blame.

I gave it a fair shot.

I spent time refining, structuring, role-playing, injecting few-shot examples, looking at examples on Twitter… you name it. And while I occasionally got a bump in output quality, I never found the returns to be linear. Actually, the more capable the models got, the less the prompt seemed to matter. What really moved the needle, at least for me, was something else entirely: context, iteration, and the willingness to work with the tool—not just toss a one-liner at it and hope for brilliance.

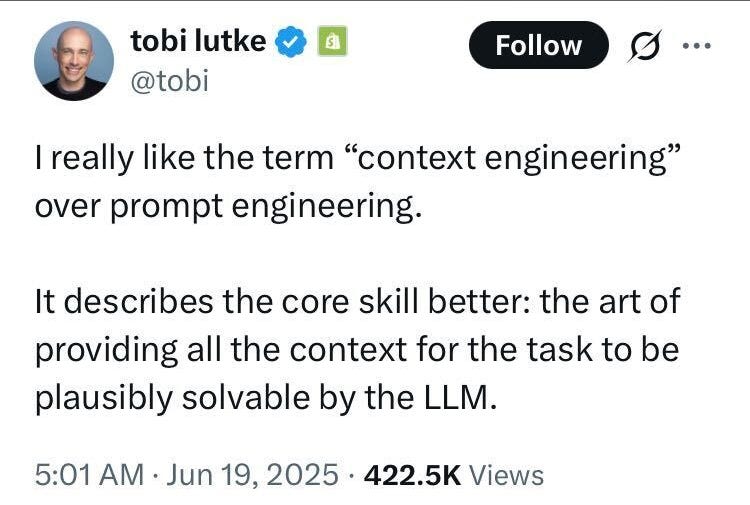

Tobi Lütke said it much better than I could:

This post is about that. It’s my contrarian take on the general wisdom: prompt engineers were dead before they were even born.

(I realize this is a bit of an overstatement; it just sounds better in storytelling, I am told!)

The Prompt‑Engineering Hype

When ChatGPT exploded into public consciousness at the end of 2022, so did the idea of prompt engineering. It quickly became the tech equivalent of alchemy: a bit of magic, a bit of mystery, and apparently capable of turning basic models into oracles. Entire ecosystems sprang up almost overnight—bootcamps, LinkedIn influencers, “prompt marketplaces,” and even six-figure job postings. For a few months, it felt like prompt engineers were going to be the next data scientists.

The logic was appealing. These were black-box systems, and if the output was bad, you didn’t blame the model—you blamed the prompt. So people started building intricate “recipes”: role-play prompts, few-shot instructions, nested constraints. Some of them genuinely helped. But many were just noise. The signal-to-buzz ratio got out of hand.

More importantly, the hype ran ahead of the data. In practice, prompt engineering was often confused with something else entirely: being clear. OpenAI’s own research showed that while techniques like Chain-of-Thought prompting could improve results, the gains were often dwarfed by what happened when users gave the model more context or iterated over multiple turns. In coding benchmarks like HumanEval and MBPP, for example, conversational refinement led to significantly higher solution rates than initial prompt quality alone. And most of those “prompt libraries”? They aged poorly as models evolved.

That matches what I saw in my own use of AI. I had spent hours refining prompts, and I kept running into the same problem: the return wasn’t linear. Better syntax helped a little, but rarely changed the outcome. What did make a difference, especially with more capable reasoning models, was breaking the problem down, giving background, responding to outputs, and shaping the task step by step. Talking to the model, not briefing it.

That shift—from prompt writing to iterative interaction—was the real unlock.

What Actually Drives Output Quality

What really opened my eyes was when I asked the model to become the prompt engineer.

I was working on a complex task and thought: instead of writing a better prompt myself, why not just ask the model to rewrite mine as if it were the expert? So I gave it a rough input—no fancy structure, just basic English—and asked it to improve it. The result? A clearer, more actionable prompt than anything I would have written solo.

That was the moment it clicked: prompt engineering wasn’t the skill—prompt negotiation was. In other words, it’s not about crafting the ideal prompt upfront, it’s about using the model as a co-pilot. The real power came from explaining what I needed in plain language, getting a version back, then reacting to it.

Iteration, not inspiration.

This is especially true with reasoning-capable models like GPT-4 or Claude Opus. These models are optimizing toward coherence and relevance across turns. So what matters most isn’t the phrasing of the first message—it’s the ongoing structure of the conversation. What’s the task? What are the constraints? What feedback are you giving as you go?

When you think about it, this isn’t so different from working with a junior team member. You don’t just give a perfect brief and walk away—you guide, adjust, clarify. You spot misinterpretations early and correct them. You refine your ask as the response takes shape. Good outputs aren’t the result of one-shot genius, but of interaction and feedback loops.

I stopped wasting energy perfecting prompts and started thinking in terms of context layers and iterations. Was I clear on the goal? Did I provide the necessary background? Was I responding to the model’s outputs with good signal?

That’s where quality lives—not in clever phrasing, but in managing the flow of the exchange.
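To make "managing the flow of the exchange" concrete, here is a minimal Python sketch of the loop described above: one plain-language ask, then a turn of feedback for every draft that comes back. Everything in it is an assumption for illustration. `refine`, `fake_model`, and the message format are hypothetical names, and `fake_model` is a stand-in stub, not a real LLM client; in practice you would pass in whatever function calls your model of choice.

```python
from typing import Callable

# A message is a simple role/content pair (an assumed format for this sketch).
Message = dict[str, str]


def refine(
    task: str,
    feedback_rounds: list[str],
    call_model: Callable[[list[Message]], str],
) -> list[Message]:
    """Run a multi-turn exchange: one initial ask, then one turn per feedback item.

    The full history is passed to the model on every call, so each new
    draft is produced with all earlier context and corrections in view.
    """
    messages: list[Message] = [{"role": "user", "content": task}]
    messages.append({"role": "assistant", "content": call_model(messages)})

    for feedback in feedback_rounds:
        messages.append({"role": "user", "content": feedback})
        messages.append({"role": "assistant", "content": call_model(messages)})

    return messages


def fake_model(messages: list[Message]) -> str:
    """Hypothetical stand-in for a real LLM call; it only counts user turns."""
    user_turns = sum(1 for m in messages if m["role"] == "user")
    return f"draft v{user_turns}"


history = refine(
    task="Suggest a name for a budgeting app for freelancers.",
    feedback_rounds=[
        "Too generic. The audience is freelance designers.",
        "Closer. Make it a single word.",
    ],
    call_model=fake_model,
)
final_draft = history[-1]["content"]
```

The point of the sketch is the shape, not the stub: the first message is deliberately unpolished, and the quality comes from the feedback turns that accumulate in `history`.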

The Rise of Context Engineering

This is why I really liked Tobi Lütke’s tweet. That line clarified something I had already started noticing. What consistently improved GenAI output wasn’t a better prompt—it was a better setup. The clearer the model’s environment, the better the result. What matters is context, not cleverness.

Context engineering means giving the model the scaffolding it needs to perform. That could be background information, goals, constraints, audience definitions, or examples. It’s the difference between saying “Write a job description” and “You’re writing a job description for a senior backend engineer in a fintech startup, hiring in Berlin, where clarity and speed matter more than playful tone. Here’s what we’ve used in the past.” Same task—totally different outcome.

And this is where GenAI starts to feel more like product design than prompt tinkering. You’re not writing one good line; you’re creating a working environment. You’re stacking relevant inputs in a way the model can process:

What’s the task?

What does “good” look like?

What context has already been shared?

What examples can be reused or avoided?
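The four questions above can be sketched as a small data structure that gets rendered into the text you hand to the model. This is only an illustration under my own assumptions: `TaskContext`, its field names, and the section titles are hypothetical and not part of any library, and the sample values are adapted from the job description example earlier in this section.

```python
from dataclasses import dataclass, field


@dataclass
class TaskContext:
    """Hypothetical container for the context layers behind a single request."""

    task: str
    good_looks_like: str
    background: list[str] = field(default_factory=list)
    examples_to_reuse: list[str] = field(default_factory=list)
    examples_to_avoid: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Turn the layers into one block of plain text, skipping empty ones."""
        sections = [
            ("Task", [self.task]),
            ("What good looks like", [self.good_looks_like]),
            ("Background", self.background),
            ("Examples to follow", self.examples_to_reuse),
            ("Examples to avoid", self.examples_to_avoid),
        ]
        parts = []
        for title, items in sections:
            if not items:
                continue
            body = "\n".join(f"- {item}" for item in items)
            parts.append(f"{title}:\n{body}")
        return "\n\n".join(parts)


context = TaskContext(
    task="Write a job description for a senior backend engineer.",
    good_looks_like="Clarity and speed matter more than playful tone.",
    background=["Fintech startup", "Hiring in Berlin"],
    examples_to_reuse=["<paste a past job description here>"],
)
prompt = context.render()
```

Nothing here is clever phrasing. The structure simply forces you to answer the questions before you ask, which is most of the work.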

Iteration also builds trust. When you see that the model can pick up on subtleties, revise based on your feedback, and improve in real time, you stop treating it like a magic vending machine. You start treating it like a flexible tool. And your role shifts too—from “user” to “orchestrator.”

This dynamic is especially powerful when you’re working on complex or abstract tasks—like naming a product, exploring strategic options, or testing a narrative. You might start with a poor prompt, but by the fifth exchange, you’re aligned. And if you’ve kept the right context along the way, that alignment compounds.

What’s the Actual “Skill” in Using LLMs?

I do not want to sound like one of the “Experts” who tell you how to make AI outputs better, but I will tell you what works for me: the skill isn’t the prompt, it’s structured thinking.

The times I have gotten the most value out of GenAI tend to be the ones where I spent time:

Defining problems clearly

Breaking them down into manageable parts

Identifying what’s missing

Giving feedback that actually moves the output forward

In short, spending time on how to frame work. And that’s a skill that predates AI. It’s what good managers do when scoping a project. What teachers do when guiding students through difficult topics. The AI makes that skill more visible—and more scalable.

This is probably why GenAI doesn’t always reward who you’d expect. It doesn’t care if you have a PhD or a copywriting background. It cares whether you can guide a conversation, stay on track, and know what “good” looks like when you see it.

And that’s also why I’m not worried about “prompt engineering” disappearing. If anything, we’ve just renamed it. It’s problem decomposition, feedback literacy, and a bit of interaction design. These aren’t niche technical abilities. They’re generalist superpowers.

There’s another piece, too: patience. Knowing that the first response will likely be wrong. That you’ll need to push back, restate things, maybe even change directions. That’s fine. You’re not trying to extract genius from a one-shot prompt. You’re building something together.

If we call this a new skill, then it’s closer to facilitation than engineering. You don’t need to be brilliant—you just need to be clear, iterative, and slightly obsessive about getting to a better outcome.

That’s a very human strength. And maybe that’s the real story here: the best users of GenAI aren’t magicians. They’re good collaborators.

Prompt engineering had its moment. It made sense in the early days—when models were simpler, outputs were brittle, and the right phrasing could mean the difference between gibberish and usefulness. But that moment has passed.

Today, the real leverage comes from context and iteration. From setting up the task clearly, layering in information, and being willing to go back and forth with the model until you get what you need. The prompt isn’t the product—the interaction is.

This shift favors people who can structure problems, adapt in real time, and know what “done” looks like. And it’s good news: you don’t need prompt libraries or special syntax. You just need clarity, patience, and a willingness to work with the system, not just throw commands at it.

Have a fantastic weekend!

Giovanni