Hello friends! I hope you’re doing well.

This week, I found myself stuck on a single, mind-bending line from Google’s launch memo for its new quantum computing chip, Willow: “Willow’s performance on this benchmark is astonishing: It performed a computation in under five minutes that would take one of today’s fastest supercomputers 10^25 (10 septillion) years. If you want to write it out, it’s 10,000,000,000,000,000,000,000,000 years.” I had to read it twice. 10 septillion years, hundreds of trillions of times longer than the age of the universe, shrunk down to five minutes. If that doesn’t stop you in your tracks, I’m not sure what will.
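For the curious, here is the back-of-the-envelope math. It assumes the usual estimate of about 13.8 billion years for the age of the universe (my assumption, not a figure from Google’s memo):

```python
# Back-of-the-envelope comparison of Google's figure with the age of the universe.
# Assumption: the universe is about 13.8 billion years old.
SUPERCOMPUTER_YEARS = 10**25
AGE_OF_UNIVERSE_YEARS = 13.8e9

ratio = SUPERCOMPUTER_YEARS / AGE_OF_UNIVERSE_YEARS
print(f"{ratio:.1e} times the age of the universe")
```

It comes out to about 7.2 × 10^14, so 10^25 years is hundreds of trillions of times the age of the universe.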

That sentence sent me down a rabbit hole on quantum mechanics, quantum computing, and something I never expected: the multiverse. I also re-read Carlo Rovelli’s “Seven Brief Lessons on Physics” to try to make sense of it. I don’t think I’ll be explaining quantum mechanics at dinner parties anytime soon, but I did come away with some clarity on how quantum computing works, and why Google’s announcement might actually be a big deal this time.

What the f**k is Quantum Mechanics, again?

Before we get to quantum computing, we need to spend a minute on quantum mechanics. I promise I’ll keep it simple because, frankly, that’s the only way I can understand it myself.

Here are the basics: in our everyday world, things follow clear, predictable rules. If you drop a ball, it falls. If you flip a coin, it lands on heads or tails. But at the quantum level (the world of atoms and the particles smaller than them), reality plays by a very different set of rules. Here, particles can be in two places at once, and the outcome of certain events isn’t settled until you “look” at them (physicists say “measure”). If that sounds bizarre, welcome to the club.

The foundations of quantum mechanics were laid in the early 1900s when physicists realized that classical physics couldn’t explain everything. For instance, why do hot objects emit light in specific colors (like the glow of a metal rod in fire)? Classical physics couldn’t fully answer that. To solve it, Max Planck proposed in 1900 that energy isn’t continuous but comes in small packets called “quanta.” This idea kicked off a revolution.
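Planck’s idea fits in one formula: each quantum of light carries energy E = h × f, where h is Planck’s constant and f is the light’s frequency. Here is a tiny sketch that plugs in red light at 650 nm (an illustrative wavelength I picked, not one from the story) to show just how small a single quantum is:

```python
# Energy of a single quantum of light (a photon): E = h * f = h * c / wavelength
PLANCK_H = 6.62607015e-34        # Planck's constant in J*s (exact by SI definition)
SPEED_OF_LIGHT = 299_792_458     # m/s (exact by SI definition)
JOULES_PER_EV = 1.602176634e-19  # one electronvolt in joules (exact)

def photon_energy_joules(wavelength_m: float) -> float:
    """Energy of one photon of the given wavelength, in joules."""
    frequency = SPEED_OF_LIGHT / wavelength_m
    return PLANCK_H * frequency

red = photon_energy_joules(650e-9)   # illustrative example: red light, 650 nm
print(f"{red:.2e} J = {red / JOULES_PER_EV:.2f} eV")
```

Higher-frequency (bluer) light comes in bigger packets, which is why the energy, and the color, of the light depends on the size of these quanta.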

Then came Albert Einstein, who used quantum principles to explain the photoelectric effect in 1905, showing that light behaves like particles (photons) in addition to waves. This discovery won him a Nobel Prize, even though he’s more famous for relativity. Niels Bohr later added his atomic model, where electrons orbit a nucleus in discrete energy levels (like planets around a sun, but with very strict “parking spots”). But things got weirder with Werner Heisenberg, who in 1927 introduced the Uncertainty Principle, which says you can’t precisely know both a particle’s position and momentum at the same time.

But the biggest conceptual leap came from Erwin Schrödinger. His equation (1926) became a cornerstone of quantum mechanics, describing how a quantum system changes and evolves over time. He also gave us the famous “Schrödinger’s cat” thought experiment (1935): the cat in a box that’s both alive and dead until observed.

Why It Matters

Quantum mechanics underpins a huge part of modern physics and everyday technology. Without it, we wouldn’t have transistors (which power every computer chip), lasers, or MRI machines. It also plays a key role in understanding fundamental particles in physics, like electrons, photons, and quarks. If classical physics is the rulebook for “big objects,” then quantum mechanics is the rulebook for the very small. Without it, we wouldn’t be able to explain the structure of atoms, the stability of matter, or the properties of light.

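A classic demonstration of quantum weirdness is the double-slit experiment. Fire particles such as electrons at a wall with two slits, and when nobody checks which slit each one goes through, they build up a wave-like interference pattern on the screen behind it. Check which slit they pass through, and the pattern disappears: they suddenly behave like particles. It’s like having a cat that meows when you leave the room but starts barking as soon as you peek inside. Physicists call this wave-particle duality, and it’s one of the cornerstones of quantum mechanics.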
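A toy model makes the “watching” part concrete. It’s a deliberate simplification (two paths, with one phase difference per point on the screen), not a full optics calculation. When nobody checks the slit, you add the two paths’ amplitudes and then square. When you do check, you square first and then add. Only the first version produces dark stripes.

```python
import cmath
import math

def screen_intensity(phase_difference: float, which_slit_checked: bool) -> float:
    """Relative brightness at one point on the screen (toy two-path model).

    Each slit contributes an amplitude of size 1/sqrt(2); the position on the
    screen sets the phase difference between the two paths.
    """
    path1 = 1 / math.sqrt(2)
    path2 = cmath.exp(1j * phase_difference) / math.sqrt(2)
    if which_slit_checked:
        # Knowing the slit destroys interference: square first, then add.
        return abs(path1) ** 2 + abs(path2) ** 2
    # Nobody checked: add the amplitudes first, then square (interference).
    return abs(path1 + path2) ** 2

for phase in (0.0, math.pi / 2, math.pi):
    print(f"phase {phase:.2f}: unobserved {screen_intensity(phase, False):.2f}, "
          f"observed {screen_intensity(phase, True):.2f}")
```

Where the two paths are half a wave out of step (phase π), the unobserved intensity drops to zero: a dark stripe. The observed version stays flat everywhere.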

Another wild concept is superposition, which is a fancy way of saying that, at a quantum level, things can exist in multiple states at once. Schrödinger’s cat is the classic analogy: a cat in a box that is both alive and dead until you open it. It’s a thought experiment, but it perfectly illustrates the strangeness of quantum reality.

What the f**k is Quantum Computing, again?

Alright, so we’ve got a rough idea of quantum mechanics — particles that can be in multiple states at once, and the whole “reality changes when you look at it” thing. Now, let’s see how quantum computing fits into this.

A regular computer processes information using bits. A bit can be either a 1 or a 0, like a light switch that’s either on or off. Everything your computer does, from loading Netflix to running Excel, is just millions (actually billions) of these bits flipping on and off.

A quantum computer doesn’t play by those rules. It uses qubits (quantum bits) instead. The trick with qubits is that, thanks to superposition, they can be both 1 and 0 at the same time (more precisely, a weighted combination of the two). If a normal bit is like a coin that lands on heads or tails, a qubit is like a coin that’s spinning in the air, being both heads and tails at once until it lands. When you measure a qubit, it “lands” on a plain 0 or 1, with odds set by that combination. This allows quantum computers to work with many possibilities at the same time, which is where their theoretical extra computing power comes from.
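To make the spinning coin a bit more concrete, here is a minimal Python sketch of a single simulated qubit. It’s a toy model that assumes the standard textbook picture: the state is two complex amplitudes, the Hadamard gate creates an equal superposition, and measuring gives 0 or 1 with probability equal to each amplitude’s squared size.

```python
import math
import random

# Toy model: a qubit is two complex amplitudes (amplitude of 0, amplitude of 1).
# The chance of reading each value is the squared size of its amplitude.
Qubit = tuple[complex, complex]

def hadamard(q: Qubit) -> Qubit:
    """Hadamard gate: turns a definite 0 into an equal mix of 0 and 1."""
    a0, a1 = q
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))

def probabilities(q: Qubit) -> tuple[float, float]:
    a0, a1 = q
    return abs(a0) ** 2, abs(a1) ** 2

def measure(q: Qubit, rng: random.Random) -> int:
    """Reading the qubit always gives a plain 0 or 1: the coin lands."""
    p0, _ = probabilities(q)
    return 0 if rng.random() < p0 else 1

zero: Qubit = (1 + 0j, 0j)
spinning = hadamard(zero)              # the "spinning coin"
print(probabilities(spinning))         # both values are (almost exactly) 0.5

rng = random.Random(42)                # fixed seed so runs are repeatable
counts = [0, 0]
for _ in range(1000):
    counts[measure(spinning, rng)] += 1
print(counts)                          # close to an even split
```

Each individual measurement is a plain 0 or 1. Only over many runs do you see the 50/50 split baked into the superposition.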

But it doesn’t stop there. Qubits can also be entangled, meaning their states are linked so that measuring one instantly tells you something about the other, no matter how far apart they are. (That link can’t be used to send messages faster than light, though.) If this sounds like magic, you’re not alone: Einstein famously called it “spooky action at a distance.” Entanglement is what allows quantum computers to “coordinate” qubits, and because the number of possible joint states doubles with every qubit you add, it’s a big part of why their power grows so quickly as they scale.
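Here is a similar toy simulation of two entangled qubits. It assumes the standard textbook recipe for preparing a so-called Bell state: a Hadamard gate followed by a CNOT gate. It shows both halves of the story. The two results always match, yet each qubit on its own looks like a fair coin, which is why entanglement can’t be used to send messages.

```python
import math
import random

# Toy model of two qubits: amplitudes for 00, 01, 10, 11 (index = 2*first + second).
State = list[complex]

def hadamard_first(state: State) -> State:
    """Put the first qubit into superposition (second qubit untouched)."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot(state: State) -> State:
    """Flip the second qubit whenever the first is 1 (swaps the 10 and 11 amplitudes)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def measure_both(state: State, rng: random.Random) -> tuple[int, int]:
    probs = [abs(a) ** 2 for a in state]
    outcome = rng.choices(range(4), weights=probs)[0]
    return outcome >> 1, outcome & 1       # (first qubit, second qubit)

bell = cnot(hadamard_first([1 + 0j, 0j, 0j, 0j]))   # (|00> + |11>) / sqrt(2)
rng = random.Random(7)
results = [measure_both(bell, rng) for _ in range(1000)]

print(all(a == b for a, b in results))     # the two qubits always agree
share_of_ones = sum(a for a, _ in results) / len(results)
print(share_of_ones)                       # near 0.5: each qubit alone looks random
```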

Why is this useful? Because it allows a quantum computer to work on many possibilities at once, while a classical computer has to check them one by one. If you imagine a maze, a normal computer walks through every path until it finds the exit. A quantum computer, loosely speaking, explores every path simultaneously, then uses interference so that the paths leading to the exit reinforce each other while the dead ends cancel out. This is why quantum computing is seen as a “cheat code” for certain types of problems, like factoring large numbers (hello, RSA encryption) or optimizing complex systems (like global supply chains).
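The maze picture is easiest to see in Grover’s search algorithm, a well-known quantum algorithm for finding one marked item among N. This is my choice of example; Google’s memo doesn’t mention it. The sketch below is a plain classical simulation of the amplitudes, only meant to show the mechanics: start with every option equally likely, then repeatedly flip the sign of the right answer and “reflect around the average.” After roughly (π/4)·√N rounds, nearly all the probability sits on the answer, versus about N/2 checks on average for a classical search.

```python
import math

def grover_success_probability(n_items: int, marked: int, rounds: int) -> float:
    """Simulate Grover's search and return the chance of measuring the marked item.

    All amplitudes stay real in this algorithm, so plain floats are enough.
    """
    amps = [1 / math.sqrt(n_items)] * n_items   # equal superposition: every option at once
    for _ in range(rounds):
        amps[marked] = -amps[marked]            # oracle: flip the sign of the right answer
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]     # diffusion: reflect every amplitude around the mean
    return amps[marked] ** 2

N = 1024
rounds = round(math.pi / 4 * math.sqrt(N))      # 25 rounds for N = 1024
print(rounds, grover_success_probability(N, marked=123, rounds=rounds))
print("classical search needs about", N // 2, "checks on average")
```

Note that the speedup here is quadratic (√N instead of N), not the dramatic kind people often imagine. Much bigger speedups are known only for more structured problems like factoring.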

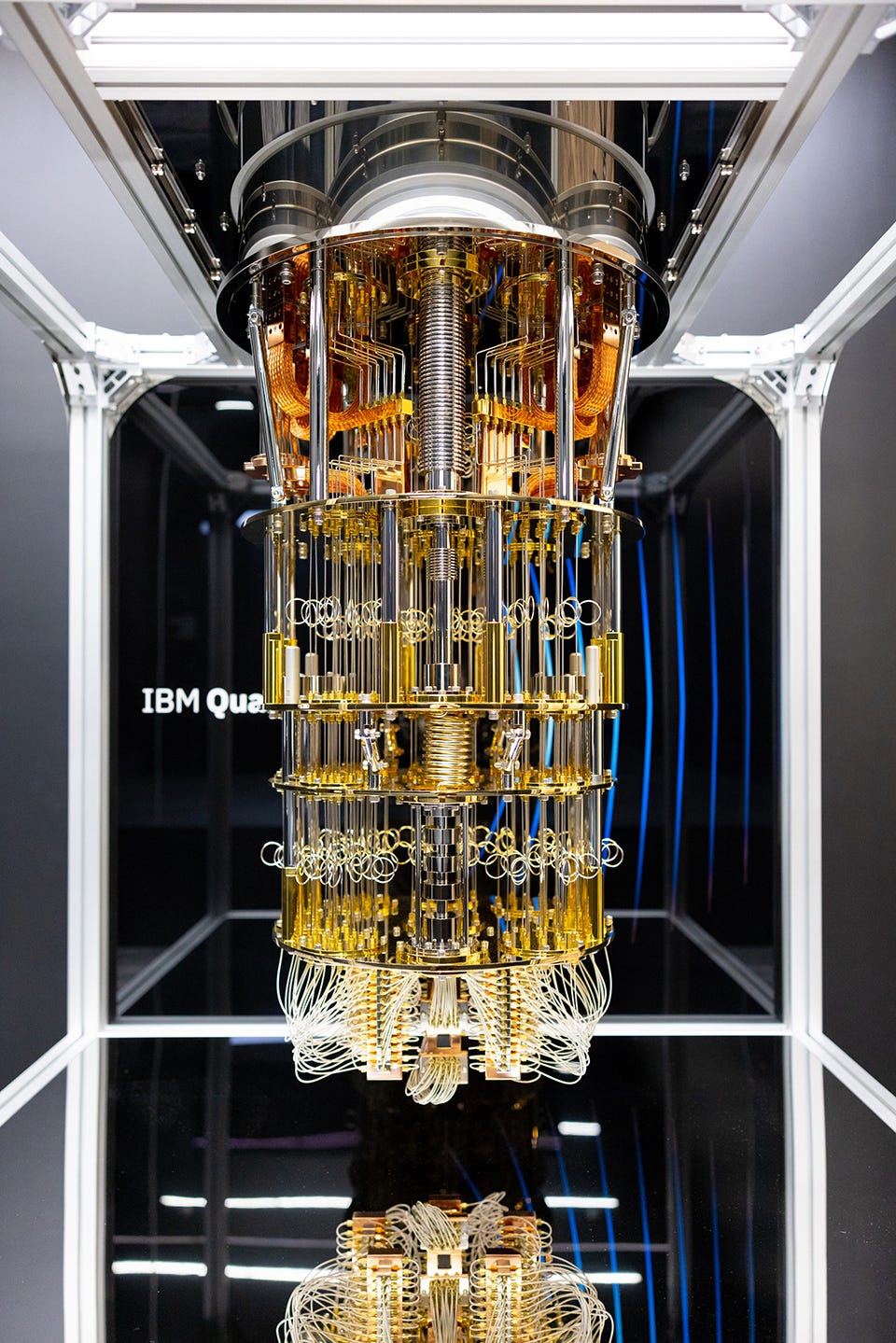

One last thing: these machines don’t look like regular laptops. Quantum processors are so fragile that they have to be stored in dilution refrigerators at near absolute zero (-273°C). The chip sits at the bottom, connected by thousands of wires to control systems at room temperature. It looks more like a sci-fi art installation than a computer.

Why Has Quantum Computing Been “On the Brink” For So Long?

I have followed quantum computing in the news for a long time, and, much like AI before the ChatGPT breakthrough, it has felt “right around the corner” for decades. Every so often, there’s a breakthrough announcement, like Google’s 2019 claim of “quantum supremacy,” but then things seem to go quiet again. So, what’s holding it back?

The short answer: it’s unbelievably hard. The long answer is a mix of physics, engineering, and the fact that reality doesn’t like being bent. Here’s why.

1. Qubits Are Very Fragile

Qubits aren’t like traditional silicon chips that can handle the heat of your laptop. They need to be isolated from everything: heat, vibration, sound, cosmic rays. Even a stray photon can mess things up. This is why quantum computers live in dilution refrigerators at temperatures close to absolute zero (-273°C), where thermal noise is low enough for qubits to hold on to their quantum properties. The moment anything “touches” a qubit, it loses its quantum state (physicists call this decoherence) and behaves like a normal bit, like that spinning coin finally landing on heads or tails. This makes the environment for qubits incredibly difficult (and expensive) to maintain.

2. Error Correction is a Nightmare

Even if you can keep qubits stable, they’re still error-prone. In classical computers, if a bit flips from 1 to 0 due to, say, a power surge, error-correcting codes (often built right into the hardware, as in ECC memory) catch it and fix it. Quantum error correction is a whole other beast. You can’t simply copy a qubit to keep a backup, and since you can’t “observe” a qubit without collapsing it, how do you know when something went wrong?

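The answer is to spread each piece of information across many “redundant” physical qubits that work together to spot errors without observing the stored value directly. Together they act as a single, more reliable “logical” qubit, but the overhead is steep: you need dozens to hundreds of physical qubits for every logical qubit you actually compute with. It’s like needing 10 people to monitor a single person’s pulse, and each of those people needs their own monitoring system. The catch has always been that adding more qubits also adds more chances for errors. Google says Willow reversed that trend: as it grew its grid of qubits from 3×3 to 5×5 to 7×7, the error rate was cut in half at each step. That might finally be the breakthrough we’ve been waiting for.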
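The “spot errors without looking” trick can be sketched with the simplest code there is: the 3-bit repetition code. This is a classical illustration, and Willow uses the far more elaborate surface code, but the quantum bit-flip code rests on the same idea: parity checks between neighbors reveal where a flip happened without revealing the stored value itself.

```python
import random

def encode(bit: int) -> list[int]:
    """Repetition code: store one logical bit as three physical copies."""
    return [bit, bit, bit]

def syndrome(block: list[int]) -> tuple[int, int]:
    """Parity checks on neighboring pairs. They reveal WHERE a single flip
    happened without revealing whether the stored value is 0 or 1."""
    return block[0] ^ block[1], block[1] ^ block[2]

# Which copy to flip back for each syndrome, assuming at most one error.
FLIP_FOR_SYNDROME = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(block: list[int]) -> list[int]:
    fixed = block.copy()
    position = FLIP_FOR_SYNDROME[syndrome(block)]
    if position is not None:
        fixed[position] ^= 1
    return fixed

def logical_error_rate(p: float, trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate: how often does a stored 0 come back wrong
    when each copy independently flips with probability p?"""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        noisy = [b ^ int(rng.random() < p) for b in encode(0)]
        if correct(noisy)[0] != 0:
            failures += 1
    return failures / trials

p = 0.01
print(f"physical error rate {p}, logical error rate ~{logical_error_rate(p):.5f}")
```

With a 1% chance of each copy flipping, the corrected block fails only when two or more copies flip, roughly 3 × 0.01² ≈ 0.03% of the time. That trade (more copies, far fewer errors) only pays off while the per-copy error rate is low enough, which is why getting physical qubits clean enough matters so much.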

3. Scaling is Exponentially Harder

Building a quantum computer with 2 qubits is relatively easy. Building one with 4 qubits? Harder. 8 qubits? A nightmare. 100 qubits? Welcome to quantum hell. Each new qubit needs its own wiring and control, has to stay synchronized with all the others, and adds new ways for errors to creep in, so the difficulty grows much faster than the qubit count. Scaling from 1 to 100 qubits is not like scaling from 1 to 100 normal computer processors. It’s more like building 100 perfectly synchronized orchestras, where every musician is also a quantum particle that refuses to play along unless it’s at absolute zero.

And yet, scaling is essential. To unlock quantum’s real power, you need a lot of qubits working together. Google’s Willow chip is one of the first chips that aims to do this while also handling error correction. If they succeed, we might finally move past the “demo” stage of quantum computing.
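One way to see why qubit count matters so much: simulating n qubits exactly on a classical computer means storing 2^n complex numbers, so every extra qubit doubles the memory. The sketch below assumes 16 bytes per amplitude (double-precision complex numbers). It’s a brute-force upper bound, not necessarily how Google arrived at its 10^25-year estimate, since real simulations use cleverer methods.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for an exact brute-force simulation: 2**n complex amplitudes.

    Assumes double-precision complex numbers (16 bytes each).
    """
    return (2 ** n_qubits) * bytes_per_amplitude

for n in (10, 30, 50, 100):
    gib = state_vector_bytes(n) / 2 ** 30
    print(f"{n:>3} qubits -> {gib:.3g} GiB")
```

Thirty qubits need 16 GiB, which a well-equipped laptop can hold; 50 qubits already need about 17 million GiB.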

4. The Use Cases Aren’t Obvious (Yet)

For years, people have said quantum computing will “change everything,” but they rarely specify what it will change. Unlike classical computing, where use cases were clear (faster spreadsheets, better games, cooler iPhones), quantum computing isn’t useful for everyday tasks. Its real power lies in specific problems that classical computers struggle with:

• Optimization: Finding the most efficient route for logistics or supply chains.

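• Cryptography: Cracking codes, like the RSA encryption that protects bank transactions and much of the web (Bitcoin relies on a different scheme, elliptic-curve signatures, which a large quantum computer could also break).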

• Molecular Simulation: Simulating the behavior of molecules for drug discovery.

These are important problems, but they aren’t things you’ll notice on your phone. Quantum computing isn’t going to make Netflix faster or your TikTok feed smarter. It’s aimed at “big, hard problems” like optimizing global supply chains or revolutionizing pharmaceutical research.

Why This Time Feels Different (Enter Google’s Willow)

Every few years, there’s a “breakthrough moment” in quantum computing. Google claimed “quantum supremacy” in 2019, but it was later criticized because the problem their quantum computer solved wasn’t particularly useful. This time, however, the breakthrough feels bigger. Google’s Willow chip is a step toward solving the scaling problem (more qubits) and the error correction problem (fewer mistakes as you scale).

If this progress continues, quantum computing may finally graduate from “concept” to “tool.” And when that happens, expect to hear a lot more about cryptography, drug discovery, and (of course) the multiverse.

Every new technology promises to “change everything,” but most of them don’t. Quantum computing is one of the rare cases where that promise might actually hold up. Google’s Willow chip isn’t just a faster computer; it’s a potential shift in how we solve certain problems. Problems that, until now, were considered practically unsolvable.

1. It Could Break Modern Cryptography (Yes, Even Bitcoin)

If you’ve ever heard that quantum computers will “break the internet,” this is what people are talking about. Much of today’s encryption, the kind that protects your bank details, Bitcoin wallets, and online passwords, relies on math problems that are easy to set up but extremely hard to reverse. For RSA, that problem is factoring: multiplying two large prime numbers together is trivial, but recovering the two primes from their product is not. A normal computer would take millions of years to crack a 2048-bit RSA key. But a large enough quantum computer? In principle, it could do it in hours, maybe even minutes.
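Here is textbook RSA with deliberately tiny primes (61 and 53, the kind used in classroom examples). Real keys use primes hundreds of digits long, plus padding that this sketch leaves out. It shows why factoring is the whole ballgame: anyone who can split n back into its two primes can rebuild the private key from public information.

```python
import math

def make_toy_keys(p: int, q: int, e: int = 17) -> tuple[int, int, int]:
    """Textbook RSA key generation from two primes (no padding, insecure sizes)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    if math.gcd(e, phi) != 1:
        raise ValueError("e must share no factor with (p-1)*(q-1)")
    d = pow(e, -1, phi)              # private exponent: modular inverse (Python 3.8+)
    return n, e, d

def factor_by_trial_division(n: int) -> tuple[int, int]:
    """The 'attack': find the smallest factor of n. Instant here, hopeless for 2048-bit n."""
    f = 2
    while n % f:
        f += 1
    return f, n // f

n, e, d = make_toy_keys(61, 53)      # public key (n, e), private key d
message = 65
ciphertext = pow(message, e, n)      # anyone can encrypt with the public key
assert pow(ciphertext, d, n) == message

# An attacker who can factor n rebuilds the private key from public info alone.
p, q = factor_by_trial_division(n)
_, _, stolen_d = make_toy_keys(p, q, e)
print(pow(ciphertext, stolen_d, n))  # prints 65
```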

This is thanks to Shor’s Algorithm, a method that only works on quantum computers. It can factor large numbers efficiently, and a variant of it also breaks the elliptic-curve math behind Bitcoin. The moment a large-scale, error-corrected quantum computer becomes operational, most of today’s public-key encryption methods will become obsolete. Banks, tech companies, and governments know this, which is why there’s already a push for post-quantum cryptography: new encryption methods designed to resist quantum attacks. Bitcoin’s cryptography, for example, would need to be updated or risk collapse.
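Shor’s algorithm is mostly classical number theory wrapped around one quantum step: finding the period r of a^x mod N. The sketch below uses a brute-force loop as a stand-in for that quantum step (that loop is exactly what becomes hopeless for 2048-bit numbers). It then does the classical post-processing that turns the period into factors.

```python
import math
from typing import Optional

def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (assumes gcd(a, n) == 1).

    Stand-in for the quantum step: Shor's algorithm finds r efficiently with
    the quantum Fourier transform, while this loop can take exponential time.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_via_period(n: int, a: int) -> Optional[tuple[int, int]]:
    """Classical post-processing from Shor's algorithm. Returns None when
    this choice of a fails, in which case you retry with a different a."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g             # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None                  # odd period: no luck with this a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None                  # a**(r/2) = -1 mod n: no luck either
    p = math.gcd(y - 1, n)
    return p, n // p

print(find_period(7, 15))            # prints 4
print(factor_via_period(15, 7))      # prints (3, 5)
```

For N = 15 and a = 7, the period is 4, so y = 7² mod 15 = 4, and gcd(3, 15) gives the factor 3 (and therefore 5).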

2. It Could Transform Drug Discovery

Discovering new drugs takes years of trial and error, but this could really change, because molecules are quantum systems. They behave according to the rules of quantum mechanics, which makes them notoriously hard to simulate with classical computers. But since quantum computers operate on quantum principles, they can “speak the same language” as molecules. Chips like Google’s Willow could one day model molecular interactions faster and more accurately than any supercomputer. This could lead to faster drug discovery, more precise cancer treatments, and even better materials for batteries.

Companies like QPharmetra and IBM are already working on quantum simulations for molecules. If successful, quantum computing could turn drug discovery from a “years-long process” into something much faster and more targeted.

3. It Could Revolutionize Supply Chains

Optimization problems are the bread and butter of quantum computing. Figuring out the best route for 1 truck with a handful of stops is easy. But figuring out the most efficient routes for 1,000 trucks, planes, and cargo ships, all while managing delays and inventory changes? That’s where things get tricky.

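Classical computers tackle these kinds of “combinatorial optimization” problems with clever shortcuts, because brute force quickly becomes impossible, and they often settle for “good enough” solutions. Quantum computers might be able to weigh many possible routes at once and find better solutions faster, though whether they will beat the best classical methods here is still being researched.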
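Simple counting explains why brute force stops working so quickly: with one fixed starting point and direction ignored, n stops allow (n − 1)!/2 distinct round trips. The sketch below counts them and brute-forces a tiny four-stop example; the stop coordinates are made up for illustration.

```python
import itertools
import math

def route_count(n_stops: int) -> int:
    """Distinct round trips through n stops (fixed start, direction ignored)."""
    return math.factorial(n_stops - 1) // 2

def shortest_round_trip(points: list[tuple[float, float]]) -> tuple[float, tuple[int, ...]]:
    """Brute force: try every order of visiting the stops after stop 0."""
    best: tuple[float, tuple[int, ...]] = (math.inf, ())
    for order in itertools.permutations(range(1, len(points))):
        tour = (0, *order, 0)
        length = sum(math.dist(points[a], points[b]) for a, b in zip(tour, tour[1:]))
        best = min(best, (length, tour))
    return best

# Hypothetical stops at the corners of a 1 x 1 square.
stops = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0), (1.0, 0.0)]
print(shortest_round_trip(stops))

for n in (5, 10, 20):
    print(n, "stops:", route_count(n), "possible round trips")
```

Twenty stops already allow about 6 × 10^16 round trips, which is why real route planners rely on shortcuts rather than checking every option.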

4. It Could Unlock Climate Models We Can’t Run Today

Climate models are basically giant computer simulations that predict weather, sea levels, and climate changes. The better the model, the more accurate the prediction. But current climate models are limited by classical computing power. If you’ve ever wondered why climate change predictions have such a wide range of possible outcomes, this is part of the reason.

Quantum computers could change that. By modeling weather systems, fluid dynamics, and atmospheric changes in parallel (thanks to superposition), they could generate more precise forecasts in less time. That means earlier hurricane warnings, better flood predictions, and smarter disaster preparedness. This could save lives.

The Multiverse Theory and Quantum Computing

In the announcement, Hartmut Neven, the founder of Google Quantum AI, wrote that Willow’s performance “lends credence to the notion that quantum computation occurs in many parallel universes.” Put more casually: the chip seems to have “borrowed” computation from parallel universes, which, on this view, hints at the existence of the multiverse.


What does any of this have to do with the multiverse? It’s a fair question. It’s not every day that a Google press release casually mentions “parallel universes” as if it’s just another bullet point. But there’s actually a good reason why the multiverse comes up when talking about quantum computing.

The idea comes from a physicist named David Deutsch, one of the pioneers of quantum computing. He’s also one of the strongest proponents of the “Many-Worlds Interpretation” of quantum mechanics. According to this view, every time a quantum event occurs — like a particle “deciding” to be in one state or another — the universe splits into two versions, one where the particle takes option A and one where it takes option B. While it sounds like sci-fi, it’s one of the leading interpretations in modern physics.

How Does This Relate to Quantum Computing?

To understand the connection, you need to think about how a quantum computer works. When a normal computer runs a calculation, it follows a single path: check option 1, option 2, option 3, and so on, one after another. But a quantum computer, thanks to superposition, doesn’t check the options one by one. In a sense, it works on all of them at the same time, then uses interference so that wrong answers cancel out and the right one stands out when you measure.

So where does the multiverse fit in? According to David Deutsch’s view, each of these simultaneous possibilities could be playing out in a “parallel universe.” Imagine you’re solving a Sudoku puzzle. A classical computer would try every possible solution one by one. A quantum computer, in theory, would explore all possible Sudoku grids at once, each one in a “parallel world,” and then let those worlds interfere so that the right solution is the one that survives when you measure. From this perspective, quantum computers aren’t “faster” in the traditional sense. They’re calling on parallel universes for help.
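Fittingly, David Deutsch also gave us one of the very first quantum algorithms. In its modern textbook form, Deutsch’s algorithm decides whether a one-bit function f is constant (f(0) = f(1)) or balanced (f(0) ≠ f(1)) with a single query, where a classical computer needs two. Below is a classical simulation of the simplified “phase oracle” version: the two branches (worlds, if you like) each pick up a sign from f, then interfere. Whether you read those branches as literal universes or just as math, the arithmetic is the same.

```python
import math
from typing import Callable

def deutsch(f: Callable[[int], int]) -> str:
    """Classical simulation of Deutsch's algorithm (phase-oracle form).

    On real hardware the oracle is applied ONCE to a superposition of both
    inputs; this simulation has to evaluate f on each branch separately.
    """
    s = 1 / math.sqrt(2)
    branches = [s, s]                                        # Hadamard on |0>: inputs 0 and 1 at once
    branches = [branches[x] * (-1) ** f(x) for x in (0, 1)]  # each branch picks up a sign
    amp0 = s * (branches[0] + branches[1])                   # second Hadamard: branches interfere
    # Same signs (constant f) reinforce on 0; opposite signs (balanced f) cancel there.
    return "constant" if abs(amp0) ** 2 > 0.5 else "balanced"

for name, f in [("always 0", lambda x: 0), ("always 1", lambda x: 1),
                ("identity", lambda x: x), ("NOT", lambda x: 1 - x)]:
    print(name, "->", deutsch(f))
```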

To be clear, not every physicist agrees with this interpretation. The Many-Worlds Interpretation is one of several competing views of quantum mechanics, and you don’t have to believe in literal parallel universes to believe in quantum computing. But as Google’s launch memo hints, the mathematics behind quantum computing is consistent with the idea of multiple parallel worlds working in sync.

Is the Multiverse Real or Just a Useful Metaphor?

This is one of the biggest debates in modern physics. The multiverse idea sounds like science fiction, and for many physicists, that’s exactly what it is: a mathematical tool that just happens to be useful for explaining how quantum systems behave. Others, like David Deutsch, believe the multiverse is literal — that there really are parallel universes playing out next to ours.

What Does This Mean for Us?

For most of us, it doesn’t change much. You’re not going to “visit” another universe anytime soon. But it does change how we think about reality. If quantum computing is tapping into a multiverse, it forces us to reconsider the nature of existence itself. And while most of us don’t need to worry about the theoretical implications, it’s fascinating to think that the next big computing breakthrough could depend on “borrowing from other universes.”

Where Does This Leave Us?

Quantum computing isn’t something you’ll see in your phone next year. But it is definitely something worth following. Based on everything I have read this week, quantum computing feels, for the first time, like it’s not just around the corner: it’s at the door.

Wishing you a fantastic weekend and joyful, relaxing holidays!

Giovanni

Book in a Tweet

Seven Brief Lessons on Physics - Carlo Rovelli

I am a huge fan of Rovelli: he has an incredible talent for making very complicated topics super engaging to read. A short but fascinating book!
